Cześć,

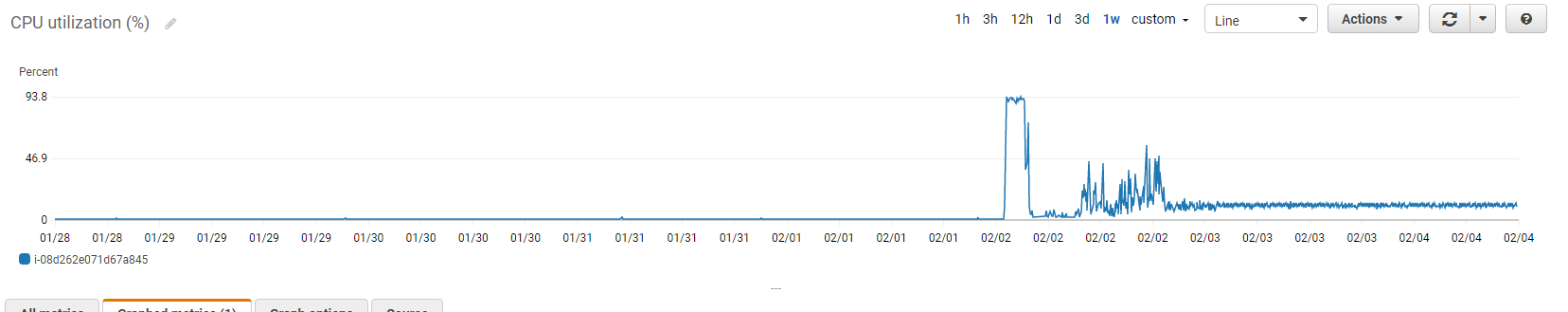

na AWS Free Tier mam uruchomioną instancję EC2 z Debianem, a nim deployuję sobie appkę dockerem. Używam docker-compose do uruchomienia trzech obrazów: apache, javy ze springiem i mongodb. Z aplikacji korzystam w sumie sam, pewnego dnia przestała działać, a na awsie zobaczyłem coś takiego:

po czym AWS ubił instancję. W logach widzę coś takiego:

2021-02-02 06:09:38.353 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.cluster : Exception in monitor thread while connecting to server mongodb:27017

com.mongodb.MongoSocketReadTimeoutException: Timeout while receiving message

at com.mongodb.internal.connection.InternalStreamConnection.translateReadException(InternalStreamConnection.java:631) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveMessageWithAdditionalTimeout(InternalStreamConnection.java:516) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveCommandMessageResponse(InternalStreamConnection.java:356) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receive(InternalStreamConnection.java:305) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.lookupServerDescription(DefaultServerMonitor.java:218) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.run(DefaultServerMonitor.java:144) ~[mongodb-driver-core-4.1.1.jar!/:na]

at java.base/java.lang.Thread.run(Thread.java:830) ~[na:na]

Caused by: java.net.SocketTimeoutException: Read timed out

at java.base/sun.nio.ch.NioSocketImpl.timedRead(NioSocketImpl.java:284) ~[na:na]

at java.base/sun.nio.ch.NioSocketImpl.implRead(NioSocketImpl.java:310) ~[na:na]

at java.base/sun.nio.ch.NioSocketImpl.read(NioSocketImpl.java:351) ~[na:na]

at java.base/sun.nio.ch.NioSocketImpl$1.read(NioSocketImpl.java:802) ~[na:na]

at java.base/java.net.Socket$SocketInputStream.read(Socket.java:937) ~[na:na]

at com.mongodb.internal.connection.SocketStream.read(SocketStream.java:109) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.SocketStream.read(SocketStream.java:131) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveResponseBuffers(InternalStreamConnection.java:648) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveMessageWithAdditionalTimeout(InternalStreamConnection.java:513) ~[mongodb-driver-core-4.1.1.jar!/:na]

... 5 common frames omitted

2021-02-02 06:10:20.440 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.connection : Opened connection [connectionId{localValue:4, serverValue:4}] to mongodb:27017

2021-02-02 06:10:21.564 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.connection : Opened connection [connectionId{localValue:5, serverValue:5}] to mongodb:27017

2021-02-02 06:10:23.457 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.cluster : Monitor thread successfully connected to server with description ServerDescription{address=mongodb:27017, type=STANDALONE, state=CONNECTED, ok=true, minWireVersion=0, maxWireVersion=8, maxDocumentSize=16777216, logicalSessionTimeoutMinutes=30, roundTripTimeNanos=14723805491}

2021-02-02 06:17:16.885 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.cluster : Exception in monitor thread while connecting to server mongodb:27017

com.mongodb.MongoSocketReadTimeoutException: Timeout while receiving message

at com.mongodb.internal.connection.InternalStreamConnection.translateReadException(InternalStreamConnection.java:631) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveMessageWithAdditionalTimeout(InternalStreamConnection.java:516) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveCommandMessageResponse(InternalStreamConnection.java:356) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receive(InternalStreamConnection.java:305) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.lookupServerDescription(DefaultServerMonitor.java:218) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.run(DefaultServerMonitor.java:144) ~[mongodb-driver-core-4.1.1.jar!/:na]

at java.base/java.lang.Thread.run(Thread.java:830) ~[na:na]

Caused by: java.net.SocketTimeoutException: Read timed out

at java.base/sun.nio.ch.NioSocketImpl.timedRead(NioSocketImpl.java:284) ~[na:na]

at java.base/sun.nio.ch.NioSocketImpl.implRead(NioSocketImpl.java:310) ~[na:na]

at java.base/sun.nio.ch.NioSocketImpl.read(NioSocketImpl.java:351) ~[na:na]

at java.base/sun.nio.ch.NioSocketImpl$1.read(NioSocketImpl.java:802) ~[na:na]

at java.base/java.net.Socket$SocketInputStream.read(Socket.java:937) ~[na:na]

at com.mongodb.internal.connection.SocketStream.read(SocketStream.java:109) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.SocketStream.read(SocketStream.java:131) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveResponseBuffers(InternalStreamConnection.java:648) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.receiveMessageWithAdditionalTimeout(InternalStreamConnection.java:513) ~[mongodb-driver-core-4.1.1.jar!/:na]

... 5 common frames omitted

2021-02-02 06:30:31.632 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.cluster : Exception in monitor thread while connecting to server mongodb:27017

com.mongodb.MongoSocketException: mongodb: Temporary failure in name resolution

at com.mongodb.ServerAddress.getSocketAddresses(ServerAddress.java:211) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.SocketStream.initializeSocket(SocketStream.java:75) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.SocketStream.open(SocketStream.java:65) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.open(InternalStreamConnection.java:143) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.lookupServerDescription(DefaultServerMonitor.java:188) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.run(DefaultServerMonitor.java:144) ~[mongodb-driver-core-4.1.1.jar!/:na]

at java.base/java.lang.Thread.run(Thread.java:830) ~[na:na]

Caused by: java.net.UnknownHostException: mongodb: Temporary failure in name resolution

at java.base/java.net.Inet4AddressImpl.lookupAllHostAddr(Native Method) ~[na:na]

at java.base/java.net.InetAddress$PlatformNameService.lookupAllHostAddr(InetAddress.java:930) ~[na:na]

at java.base/java.net.InetAddress.getAddressesFromNameService(InetAddress.java:1499) ~[na:na]

at java.base/java.net.InetAddress$NameServiceAddresses.get(InetAddress.java:849) ~[na:na]

at java.base/java.net.InetAddress.getAllByName0(InetAddress.java:1489) ~[na:na]

at java.base/java.net.InetAddress.getAllByName(InetAddress.java:1348) ~[na:na]

at java.base/java.net.InetAddress.getAllByName(InetAddress.java:1282) ~[na:na]

at com.mongodb.ServerAddress.getSocketAddresses(ServerAddress.java:203) ~[mongodb-driver-core-4.1.1.jar!/:na]

... 6 common frames omitted

2021-02-02 06:44:44.066 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.cluster : Exception in monitor thread while connecting to server mongodb:27017

com.mongodb.MongoSocketException: mongodb

at com.mongodb.ServerAddress.getSocketAddresses(ServerAddress.java:211) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.SocketStream.initializeSocket(SocketStream.java:75) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.SocketStream.open(SocketStream.java:65) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.InternalStreamConnection.open(InternalStreamConnection.java:143) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.lookupServerDescription(DefaultServerMonitor.java:188) ~[mongodb-driver-core-4.1.1.jar!/:na]

at com.mongodb.internal.connection.DefaultServerMonitor$ServerMonitorRunnable.run(DefaultServerMonitor.java:144) ~[mongodb-driver-core-4.1.1.jar!/:na]

at java.base/java.lang.Thread.run(Thread.java:830) ~[na:na]

Caused by: java.net.UnknownHostException: mongodb

at java.base/java.net.InetAddress$CachedAddresses.get(InetAddress.java:798) ~[na:na]

at java.base/java.net.InetAddress$NameServiceAddresses.get(InetAddress.java:884) ~[na:na]

at java.base/java.net.InetAddress.getAllByName0(InetAddress.java:1489) ~[na:na]

at java.base/java.net.InetAddress.getAllByName(InetAddress.java:1348) ~[na:na]

at java.base/java.net.InetAddress.getAllByName(InetAddress.java:1282) ~[na:na]

at com.mongodb.ServerAddress.getSocketAddresses(ServerAddress.java:203) ~[mongodb-driver-core-4.1.1.jar!/:na]

... 6 common frames omitted

2021-02-02 09:19:19.732 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.connection : Opened connection [connectionId{localValue:75, serverValue:6}] to mongodb:27017

2021-02-02 09:19:19.732 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.connection : Opened connection [connectionId{localValue:76, serverValue:7}] to mongodb:27017

2021-02-02 09:19:19.732 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.cluster : Monitor thread successfully connected to server with description ServerDescription{address=mongodb:27017, type=STANDALONE, state=CONNECTED, ok=true, minWireVersion=0, maxWireVersion=8, maxDocumentSize=16777216, logicalSessionTimeoutMinutes=30, roundTripTimeNanos=339696023}

2021-02-02 09:21:45.624 INFO 1 --- [}-mongodb:27017] org.mongodb.driver.cluster : Exception in monitor thread while connecting to server mongodb:27017

And in the MongoDB logs:

, after globalLock: 176, after locks: 215, after logicalSessionRecordCache: 263, after network: 263, after opLatencies: 273, after opReadConcernCounters: 283, after opcounters: 293, after opcountersRepl: 293, after oplogTruncation: 477, after repl: 477, after security: 487, after storageEngine: 563, after tcmalloc: 616, after trafficRecording: 626, after transactions: 655, after transportSecurity: 655, after twoPhaseCommitCoordinator: 681, after wiredTiger: 1009, at end: 1533 }

2021-02-02T06:11:01.868+0000 I COMMAND [conn5] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 151ms

2021-02-02T06:11:04.238+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 24, after asserts: 108, after connections: 129, after electionMetrics: 184, after extra_info: 194, after flowControl: 218, after freeMonitoring: 253, after globalLock: 253, after locks: 275, after logicalSessionRecordCache: 309, after network: 406, after opLatencies: 406, after opReadConcernCounters: 416, after opcounters: 416, after opcountersRepl: 416, after oplogTruncation: 676, after repl: 748, after security: 758, after storageEngine: 876, after tcmalloc: 965, after trafficRecording: 975, after transactions: 998, after transportSecurity: 998, after twoPhaseCommitCoordinator: 1025, after wiredTiger: 1529, at end: 1677 }

2021-02-02T06:11:09.614+0000 I COMMAND [conn4] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 324ms

2021-02-02T06:11:11.094+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 44, after asserts: 129, after connections: 139, after electionMetrics: 209, after extra_info: 233, after flowControl: 256, after freeMonitoring: 304, after globalLock: 304, after locks: 314, after logicalSessionRecordCache: 382, after network: 429, after opLatencies: 429, after opReadConcernCounters: 439, after opcounters: 449, after opcountersRepl: 449, after oplogTruncation: 794, after repl: 933, after security: 975, after storageEngine: 1143, after tcmalloc: 1237, after trafficRecording: 1247, after transactions: 1279, after transportSecurity: 1289, after twoPhaseCommitCoordinator: 1318, after wiredTiger: 1693, at end: 1827 }

2021-02-02T06:11:15.777+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 84, after asserts: 171, after connections: 181, after electionMetrics: 240, after extra_info: 262, after flowControl: 272, after freeMonitoring: 308, after globalLock: 318, after locks: 343, after logicalSessionRecordCache: 400, after network: 474, after opLatencies: 474, after opReadConcernCounters: 474, after opcounters: 474, after opcountersRepl: 474, after oplogTruncation: 611, after repl: 621, after security: 621, after storageEngine: 715, after tcmalloc: 751, after trafficRecording: 753, after transactions: 761, after transportSecurity: 771, after twoPhaseCommitCoordinator: 792, after wiredTiger: 945, at end: 1129 }

2021-02-02T06:11:19.094+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 35, after asserts: 112, after connections: 133, after electionMetrics: 155, after extra_info: 165, after flowControl: 165, after freeMonitoring: 213, after globalLock: 223, after locks: 272, after logicalSessionRecordCache: 357, after network: 459, after opLatencies: 459, after opReadConcernCounters: 469, after opcounters: 479, after opcountersRepl: 479, after oplogTruncation: 721, after repl: 855, after security: 883, after storageEngine: 1012, after tcmalloc: 1089, after trafficRecording: 1099, after transactions: 1109, after transportSecurity: 1119, after twoPhaseCommitCoordinator: 1129, after wiredTiger: 1583, at end: 1798 }

2021-02-02T06:11:21.120+0000 I COMMAND [conn4] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 296ms

2021-02-02T06:11:21.205+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 34, after asserts: 105, after connections: 115, after electionMetrics: 151, after extra_info: 161, after flowControl: 171, after freeMonitoring: 206, after globalLock: 206, after locks: 240, after logicalSessionRecordCache: 279, after network: 315, after opLatencies: 315, after opReadConcernCounters: 329, after opcounters: 329, after opcountersRepl: 329, after oplogTruncation: 471, after repl: 471, after security: 481, after storageEngine: 576, after tcmalloc: 614, after trafficRecording: 624, after transactions: 634, after transportSecurity: 634, after twoPhaseCommitCoordinator: 644, after wiredTiger: 997, at end: 1148 }

2021-02-02T06:11:24.896+0000 I COMMAND [conn5] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 215ms

2021-02-02T06:11:25.814+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 18, after asserts: 67, after connections: 67, after electionMetrics: 114, after extra_info: 124, after flowControl: 134, after freeMonitoring: 190, after globalLock: 216, after locks: 259, after logicalSessionRecordCache: 341, after network: 392, after opLatencies: 392, after opReadConcernCounters: 402, after opcounters: 412, after opcountersRepl: 412, after oplogTruncation: 502, after repl: 502, after security: 512, after storageEngine: 589, after tcmalloc: 648, after trafficRecording: 648, after transactions: 670, after transportSecurity: 670, after twoPhaseCommitCoordinator: 680, after wiredTiger: 1116, at end: 1660 }

2021-02-02T06:11:31.426+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 11, after asserts: 54, after connections: 64, after electionMetrics: 96, after extra_info: 106, after flowControl: 116, after freeMonitoring: 158, after globalLock: 158, after locks: 184, after logicalSessionRecordCache: 226, after network: 261, after opLatencies: 261, after opReadConcernCounters: 271, after opcounters: 271, after opcountersRepl: 271, after oplogTruncation: 415, after repl: 415, after security: 425, after storageEngine: 533, after tcmalloc: 569, after trafficRecording: 569, after transactions: 579, after transportSecurity: 579, after twoPhaseCommitCoordinator: 600, after wiredTiger: 842, at end: 1066 }

2021-02-02T06:11:33.084+0000 I COMMAND [conn4] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 344ms

2021-02-02T06:11:34.634+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 19, after asserts: 114, after connections: 114, after electionMetrics: 198, after extra_info: 226, after flowControl: 236, after freeMonitoring: 302, after globalLock: 302, after locks: 328, after logicalSessionRecordCache: 428, after network: 438, after opLatencies: 448, after opReadConcernCounters: 468, after opcounters: 478, after opcountersRepl: 478, after oplogTruncation: 595, after repl: 595, after security: 605, after storageEngine: 640, after tcmalloc: 690, after trafficRecording: 690, after transactions: 700, after transportSecurity: 700, after twoPhaseCommitCoordinator: 710, after wiredTiger: 1015, at end: 1275 }

2021-02-02T06:11:38.953+0000 I COMMAND [conn5] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 337ms

2021-02-02T06:11:39.681+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 7, after asserts: 10, after connections: 31, after electionMetrics: 72, after extra_info: 72, after flowControl: 93, after freeMonitoring: 124, after globalLock: 134, after locks: 144, after logicalSessionRecordCache: 181, after network: 219, after opLatencies: 219, after opReadConcernCounters: 240, after opcounters: 240, after opcountersRepl: 240, after oplogTruncation: 440, after repl: 450, after security: 472, after storageEngine: 579, after tcmalloc: 691, after trafficRecording: 701, after transactions: 727, after transportSecurity: 737, after twoPhaseCommitCoordinator: 759, after wiredTiger: 1098, at end: 1420 }

2021-02-02T06:11:44.682+0000 I COMMAND [conn4] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 253ms

2021-02-02T06:11:45.748+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 17, after asserts: 31, after connections: 31, after electionMetrics: 71, after extra_info: 71, after flowControl: 71, after freeMonitoring: 96, after globalLock: 96, after locks: 128, after logicalSessionRecordCache: 166, after network: 201, after opLatencies: 211, after opReadConcernCounters: 221, after opcounters: 221, after opcountersRepl: 221, after oplogTruncation: 321, after repl: 321, after security: 331, after storageEngine: 400, after tcmalloc: 443, after trafficRecording: 443, after transactions: 469, after transportSecurity: 471, after twoPhaseCommitCoordinator: 497, after wiredTiger: 1023, at end: 1510 }

2021-02-02T06:11:51.055+0000 I COMMAND [LogicalSessionCacheRefresh] command config.system.sessions command: listIndexes { listIndexes: "system.sessions", cursor: {}, $db: "config" } numYields:0 reslen:307 locks:{ ReplicationStateTransition: { acquireCount: { w: 1 } }, Global: { acquireCount: { r: 1 } }, Database: { acquireCount: { r: 1 } }, Collection: { acquireCount: { r: 1 } }, Mutex: { acquireCount: { r: 1 } } } storage:{} protocol:op_msg 782ms

2021-02-02T06:11:51.055+0000 I COMMAND [LogicalSessionCacheReap] command config.system.sessions command: listIndexes { listIndexes: "system.sessions", cursor: {}, $db: "config" } numYields:0 reslen:307 locks:{ ReplicationStateTransition: { acquireCount: { w: 1 } }, Global: { acquireCount: { r: 1 } }, Database: { acquireCount: { r: 1 } }, Collection: { acquireCount: { r: 1 } }, Mutex: { acquireCount: { r: 1 } } } storage:{} protocol:op_msg 782ms

2021-02-02T06:11:53.404+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 76, after asserts: 147, after connections: 147, after electionMetrics: 175, after extra_info: 175, after flowControl: 185, after freeMonitoring: 214, after globalLock: 214, after locks: 246, after logicalSessionRecordCache: 287, after network: 320, after opLatencies: 330, after opReadConcernCounters: 340, after opcounters: 350, after opcountersRepl: 350, after oplogTruncation: 503, after repl: 503, after security: 513, after storageEngine: 770, after tcmalloc: 929, after trafficRecording: 1083, after transactions: 1115, after transportSecurity: 1176, after twoPhaseCommitCoordinator: 1222, after wiredTiger: 2716, at end: 3145 }

2021-02-02T06:11:54.280+0000 I COMMAND [conn5] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 829ms

2021-02-02T06:11:56.479+0000 I COMMAND [LogicalSessionCacheReap] command config.transactions command: find { find: "transactions", filter: { lastWriteDate: { $lt: new Date(1612244511791) } }, projection: { _id: 1 }, sort: { _id: 1 }, $db: "config" } planSummary: EOF keysExamined:0 docsExamined:0 cursorExhausted:1 numYields:0 nreturned:0 reslen:108 locks:{ ReplicationStateTransition: { acquireCount: { w: 1 } }, Global: { acquireCount: { r: 1 } }, Database: { acquireCount: { r: 1 } }, Collection: { acquireCount: { r: 2 } }, Mutex: { acquireCount: { r: 1 } } } storage:{} protocol:op_msg 2605ms

2021-02-02T06:11:59.209+0000 I COMMAND [conn4] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 811ms

2021-02-02T06:12:00.623+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 145, after asserts: 782, after connections: 983, after electionMetrics: 1115, after extra_info: 1182, after flowControl: 1219, after freeMonitoring: 1317, after globalLock: 1378, after locks: 1448, after logicalSessionRecordCache: 1553, after network: 1679, after opLatencies: 1708, after opReadConcernCounters: 1750, after opcounters: 1760, after opcountersRepl: 1760, after oplogTruncation: 2134, after repl: 2292, after security: 2349, after storageEngine: 2648, after tcmalloc: 2911, after trafficRecording: 3062, after transactions: 3089, after transportSecurity: 3099, after twoPhaseCommitCoordinator: 3125, after wiredTiger: 3536, at end: 3676 }

2021-02-02T06:12:03.331+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 26, after asserts: 84, after connections: 94, after electionMetrics: 121, after extra_info: 131, after flowControl: 153, after freeMonitoring: 186, after globalLock: 186, after locks: 214, after logicalSessionRecordCache: 276, after network: 334, after opLatencies: 334, after opReadConcernCounters: 344, after opcounters: 354, after opcountersRepl: 354, after oplogTruncation: 431, after repl: 431, after security: 431, after storageEngine: 473, after tcmalloc: 500, after trafficRecording: 500, after transactions: 510, after transportSecurity: 510, after twoPhaseCommitCoordinator: 531, after wiredTiger: 864, at end: 1040 }

2021-02-02T06:12:06.685+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 17, after asserts: 68, after connections: 78, after electionMetrics: 113, after extra_info: 123, after flowControl: 143, after freeMonitoring: 175, after globalLock: 175, after locks: 200, after logicalSessionRecordCache: 240, after network: 291, after opLatencies: 291, after opReadConcernCounters: 301, after opcounters: 311, after opcountersRepl: 311, after oplogTruncation: 488, after repl: 498, after security: 508, after storageEngine: 673, after tcmalloc: 767, after trafficRecording: 777, after transactions: 822, after transportSecurity: 832, after twoPhaseCommitCoordinator: 865, after wiredTiger: 1251, at end: 1417 }

2021-02-02T06:12:09.890+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 51, after asserts: 196, after connections: 222, after electionMetrics: 272, after extra_info: 282, after flowControl: 302, after freeMonitoring: 331, after globalLock: 341, after locks: 366, after logicalSessionRecordCache: 388, after network: 422, after opLatencies: 422, after opReadConcernCounters: 422, after opcounters: 422, after opcountersRepl: 422, after oplogTruncation: 543, after repl: 543, after security: 543, after storageEngine: 642, after tcmalloc: 684, after trafficRecording: 684, after transactions: 694, after transportSecurity: 694, after twoPhaseCommitCoordinator: 704, after wiredTiger: 1141, at end: 1552 }

2021-02-02T06:12:11.166+0000 I COMMAND [conn5] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 836ms

2021-02-02T06:12:12.552+0000 I COMMAND [conn4] command admin.$cmd command: isMaster { ismaster: 1, $db: "admin" } numYields:0 reslen:242 locks:{} protocol:op_msg 934ms

2021-02-02T06:12:15.633+0000 I COMMAND [ftdc] serverStatus was very slow: { after basic: 80, after asserts: 298, after connections: 308, after electionMetrics: 430, after extra_info: 879, after flowControl: 1132, after freeMonitoring: 1509, after globalLock: 1678, after locks: 1728, after logicalSessionRecordCache: 1813, after network: 1916, after opLatencies: 1916, after opReadConcernCounters: 1926, after opcounters: 1926, after opcountersRepl: 1926, after oplogTruncation: 2238, after repl: 2341, after security: 2368, after storageEngine: 2517, after tcmalloc: 2570, after trafficRecording: 2570, after transactions: 2580, after transportSecurity: 2614, after twoPhaseCommitCoordinator: 2624, after wiredTiger: 2921, at end: 3057 }

Unfortunately, `docker logs` did not return anything more about what had been happening in MongoDB before that.

Does anyone have an idea what could have happened? My Free Tier ends in a few months, and an incident like this could get expensive. The only thing that comes to mind is updating to newer versions of Spring and MongoDB.

This happened on Spring Boot 2.4.1 and MongoDB 4.2.